A Deep Dive into Production Alarms Using Machine Learning

The logging of historical process data in industrial automation systems has been widely mandated by regulatory bodies for a couple of decades. Its purpose is to demonstrate a business’s compliance with local codes and regulations and to support product quality control. Alarm information, however, tends to have a much shorter “shelf life” and often is not archived at all. Yet when categorized properly and analyzed with modelling techniques such as clustering analysis, historical alarm data can reveal very useful information about existing and potential issues with the processes.

Our Client is a successful business selling product in a highly competitive segment of the retail market. Their processes are straightforward, and they run fairly lean, with a good mix of automation and support staff for day-to-day operations. Although they had the visualization technology to address plant issues one at a time as they occurred, they realized that they could not get a concrete understanding of the causes of their production problems, and that is when they decided to do something about it. But where to turn?

They understood that the way they were correcting problems produced only temporary fixes, but also that they had the data needed to apply unsupervised machine learning algorithms, so they agreed to take a leap of faith and try a different approach. As the intent was to understand data that was not yet labeled, clustering was the modelling method chosen. Clustering is a form of unsupervised machine learning that presupposes that very little is known about the different classes in the data.
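To make the idea concrete, here is a minimal sketch of clustering on unlabeled data, using plain k-means in standard-library Python. It is not the analysis performed for the Client: the choice of k-means, the `kmeans` function, the two features per alarm tag (activations per shift, mean minutes active), and all the numbers are assumptions invented for illustration.

```python
import math
import random


def kmeans(points, k, iterations=50, seed=0):
    """Group unlabeled points into k clusters with plain k-means."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k of the observations
    labels = None
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        if new_labels == labels:  # no point changed cluster: converged
            break
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = tuple(sum(col) / len(members) for col in zip(*members))
    return labels, centroids


# Hypothetical alarm tags: (activations per shift, mean minutes active).
alarm_profiles = [(2, 5), (3, 6), (2, 7), (40, 1), (42, 2), (38, 1)]

labels, centroids = kmeans(alarm_profiles, k=2)
for profile, label in zip(alarm_profiles, labels):
    print(profile, "-> cluster", label)
```

No class labels are supplied at any point; the grouping comes only from distances between observations. In practice the features would usually be scaled first so that no single measurement dominates the distance.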

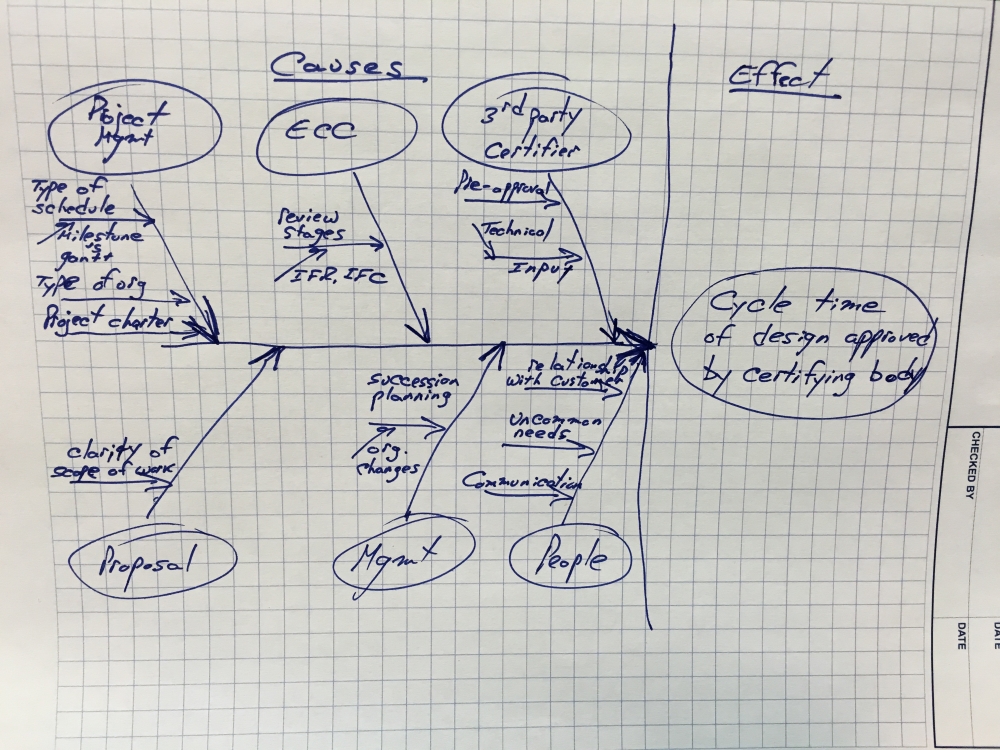

The plant alarms were clustered with two main goals. The first is prediction: separating issues that require different solutions. The second is to provide a basis for analysis: identifying the causes of the different types of issues. These are the two goals we had in mind when we made recommendations to this Client.

Below is an outline of the method used to tease out the issues. As this type of analysis can become computationally intensive with large datasets (e.g. > 500 points), cloud services such as AWS or IBM SPSS should be considered.

Methodology Used:

Problem Statement: Systemic causes of production problems.

Measure: Categorize alarms and obtain a representative subset of process alarms logged over a representative time period.

Analyze: Start with a higher-level view of the likely number of representative clusters (dendrogram). Then perform a deeper clustering analysis, supported by techniques such as PCA, and identify possible associations.

Implement: Identify issues and causes, consult with plant SMEs as required to validate.

Control: Design and implement relevant engineering controls to address issues.
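The first part of the Analyze step, using a dendrogram to judge how many clusters the data supports, can be sketched as follows. This is an illustrative sketch in standard-library Python, not the tooling used for the Client. The average-linkage rule, the "largest gap" heuristic for choosing the cut, the function names, and the sample alarm profiles are all assumptions made for the example.

```python
import math
from itertools import combinations


def average_linkage(a, b, points):
    """Mean pairwise distance between two clusters (lists of point indices)."""
    total = sum(math.dist(points[i], points[j]) for i in a for j in b)
    return total / (len(a) * len(b))


def agglomerate(points):
    """Repeatedly merge the two closest clusters; return the merge history.

    Each entry is (merge_height, member_indices). The merge heights are the
    vertical positions a dendrogram would draw.
    """
    clusters = [[i] for i in range(len(points))]
    history = []
    while len(clusters) > 1:
        # Closest pair of current clusters under average linkage.
        height, x, y = min(
            (average_linkage(clusters[a], clusters[b], points), a, b)
            for a, b in combinations(range(len(clusters)), 2)
        )
        merged = clusters[x] + clusters[y]
        clusters = [c for k, c in enumerate(clusters) if k not in (x, y)]
        clusters.append(merged)
        history.append((height, sorted(merged)))
    return history


def suggest_cluster_count(history):
    """Heuristic (an assumption): cut the tree at the largest jump in height."""
    heights = [h for h, _ in history]
    gaps = [later - earlier for earlier, later in zip(heights, heights[1:])]
    biggest = max(range(len(gaps)), key=gaps.__getitem__)
    # Stopping just before the merge that follows the biggest gap
    # leaves len(heights) - biggest clusters.
    return len(heights) - biggest


# Hypothetical alarm profiles with two numeric features each.
profiles = [(1, 1), (1, 2), (2, 1), (10, 10), (10, 11), (11, 10), (20, 1), (21, 1)]

history = agglomerate(profiles)
for height, members in history:
    print(f"merge at height {height:.2f}: {members}")
print("suggested number of clusters:", suggest_cluster_count(history))
```

The sample data contains three well-separated groups by construction, so the final merges happen at much greater heights than the early ones, and that jump is what a reader looks for when inspecting a dendrogram. The pairwise search shown here becomes expensive as the number of alarms grows, so a library implementation is the practical choice for larger datasets.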

The Client benefited from this analysis because it revealed associations between data objects that were not previously apparent. An action plan was developed. Among other process-related improvements, the plan identified the need to add a network-based firewall to prevent suspected disruptive network activity originating outside the automation system. Environment-related issues were also identified.
